What Building Products Taught Me About AI

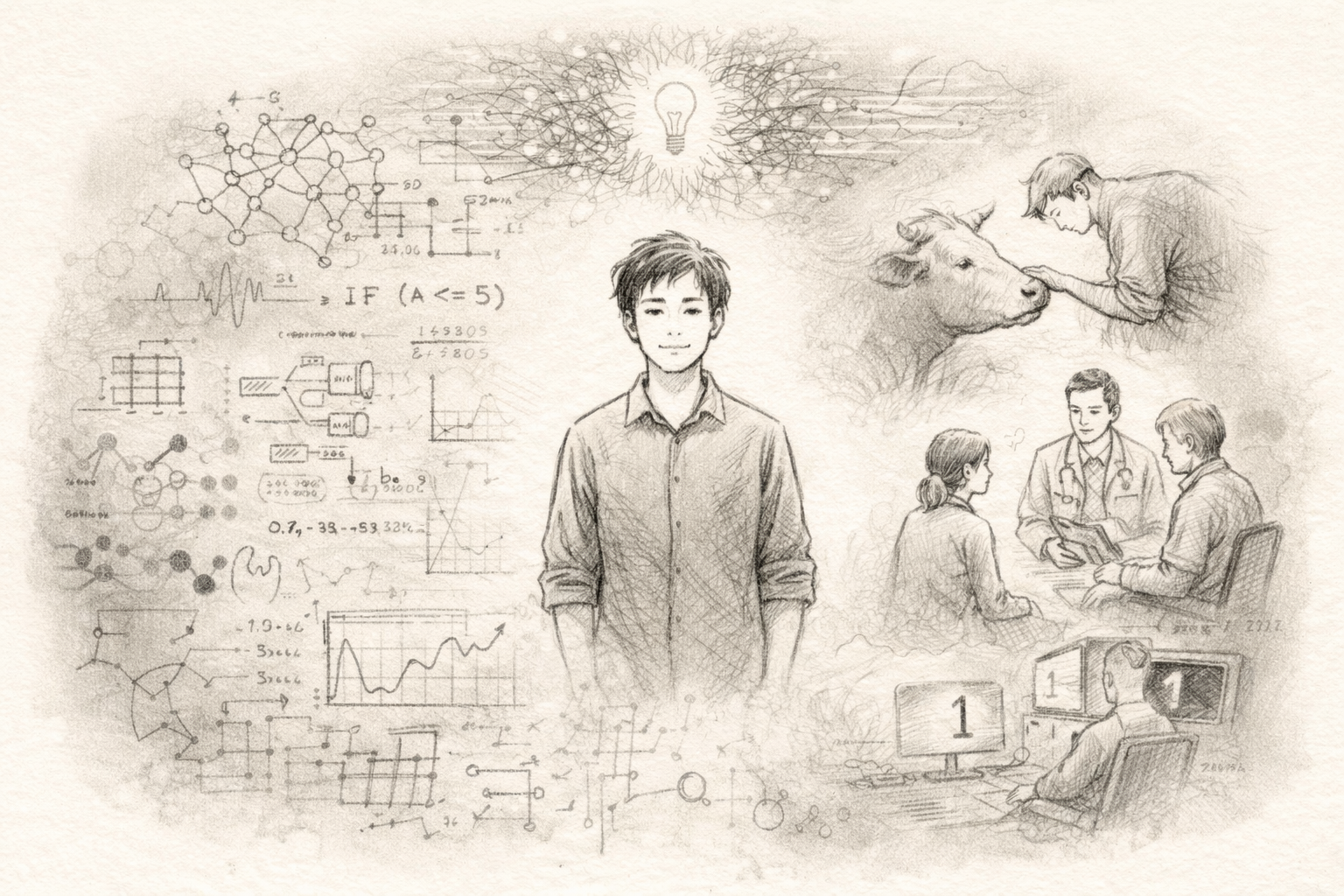

I’ve spent the last decade building products across wildly different domains - drug efficacy predictors, neuromorphic cameras, liquid staking protocols, cancer management tools. Each time, I thought I was learning about the specific technology. Looking back, I was learning something more universal.

Now, as AI reshapes every industry I’ve worked in, those lessons feel more relevant than ever.

The Technology Is Never the Hard Part

When we built a health tracker for farm animals in Singapore, the machine learning was straightforward. The hard part? Convincing farmers that a device attached to their livestock wouldn’t hurt the animals.

When we built cancer management software, the data pipeline was complex but solvable. The hard part? Understanding that patients didn’t want more information—they wanted less anxiety.

AI products will face the same challenge. The models will get better. The real question is: do we understand the humans who’ll use them?

Abstraction Hides Complexity (And That’s Often Good)

In Web3, we spent months building staking infrastructure - validators, smart contracts, reward calculations across 25 different blockchains. Then we wrapped it in an API that customers used primarily to check one number: their yield.
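To make that concrete, here is a minimal sketch of the idea, not our actual API. The names `StakePosition` and `portfolio_yield` are hypothetical, and the yield formula is a deliberate simplification (projected annual rewards divided by the amount staked, in one common unit) that ignores validator commissions, compounding, and per-chain quirks.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass(frozen=True)
class StakePosition:
    """One customer position on one chain (hypothetical shape)."""
    chain: str
    staked: float          # amount staked, in a common unit (assumption)
    annual_rewards: float  # projected rewards over a year, same unit


def portfolio_yield(positions: Iterable[StakePosition]) -> float:
    """Collapse every chain's details into the one number customers check."""
    positions = list(positions)
    total_staked = sum(p.staked for p in positions)
    if total_staked == 0:
        return 0.0  # nothing staked, so there is no yield to report
    total_rewards = sum(p.annual_rewards for p in positions)
    return total_rewards / total_staked


positions = [
    StakePosition("chain_a", staked=1000.0, annual_rewards=50.0),
    StakePosition("chain_b", staked=3000.0, annual_rewards=90.0),
]
print(f"{portfolio_yield(positions):.2%}")
```

Everything chain-specific stays behind the function signature. The caller sees a single float.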

The best products don’t expose complexity. They absorb it.

This is what good AI products will do. The user will barely notice that there is AI at work.

The Best Products Change Behavior, Not Just Outcomes

When I worked on military surveillance using neuromorphic cameras, we could detect 6 classes of vehicles with 36% better accuracy. Impressive metric. But was that the thing that changed behavior?

Extending battery life did. The military no longer had to go in every 2 weeks to replace batteries. The batteries could last for 1 year.

How did we do that?

- All of the machine learning happened on the chip.

- The camera only woke up when there was activity.

- The camera sent out only the class it detected (1 of the 6), at precisely the time it was detected.
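The three points above can be sketched as a small event-driven loop. This is an illustration under stated assumptions, not the firmware we shipped: `Frame`, `Detection`, `run_camera`, the `activity` score, the wake threshold, and the class labels are all hypothetical, and the classifier is a stand-in for the on-chip model.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, Iterator, Optional

# Placeholder labels for the 6 vehicle classes (hypothetical names).
VEHICLE_CLASSES = tuple(f"class_{i}" for i in range(6))


@dataclass(frozen=True)
class Frame:
    timestamp: float
    activity: float  # hypothetical activity score between 0 and 1


@dataclass(frozen=True)
class Detection:
    timestamp: float
    vehicle_class: str


def run_camera(
    frames: Iterable[Frame],
    classify: Callable[[Frame], Optional[str]],
    wake_threshold: float = 0.5,
) -> Iterator[Detection]:
    """Yield only what the device would transmit."""
    for frame in frames:
        if frame.activity < wake_threshold:
            continue  # no activity: stay asleep, spend no power on inference
        label = classify(frame)  # inference runs locally, on the device
        if label is not None:
            # Transmit one class label and its timestamp, never the raw frame.
            yield Detection(frame.timestamp, label)


def toy_classify(frame: Frame) -> Optional[str]:
    """Stand-in for the on-chip model (assumption for this example)."""
    return VEHICLE_CLASSES[0] if frame.activity > 0.8 else None


frames = [Frame(0.0, 0.1), Frame(1.0, 0.9), Frame(2.0, 0.6), Frame(3.0, 0.0)]
sent = list(run_camera(frames, toy_classify))
print(sent)
```

Of the four frames, two never wake the camera, one wakes it without a confident detection, and only one results in a transmission. That ratio is where the battery savings come from.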

Not more data. Better decisions!

AI tools that dump more information on humans will fail. AI tools that help humans make better decisions will win.

Questions I’m Sitting With

- How do we build AI products that augment human judgment rather than replacing it?

- What’s the product management playbook for capabilities that improve at blazing speed?

- How do you design for trust in systems that can’t fully explain themselves?

I don’t have complete answers. But I suspect the builders who ask these questions, rather than racing toward maximum automation, will create the products that actually matter.

Building something in AI? I’d love to hear what you’re learning. Get in touch.